By Gerald E. Galloway, Ph.D., P.E., BC.WRE (Hon.), Dist.M.ASCE, F.ASCE, Lewis E. Link Jr., Ph.D., and Gregory B. Baecher, Ph.D., NAE, Dist.M.ASCE

The longstanding reliance on historical climate trends to help predict future weather-related events is no longer viable. Civil engineers must adapt to the new risks of flooding and coastal storms brought about by climate change and find new solutions.

The end of stationarity is a concept that civil engineers have debated ever since the topic was raised in the February 2008 Science article “Stationarity Is Dead: Whither Water Management?” Climate stationarity is the assumption that, although weather varies from year to year, the statistical properties of the climate remain constant. That assumption allowed civil engineers to use historical records to predict future conditions.

Floods and coastal storms are among the most destructive natural hazards, yet climate change is eroding our ability to forecast and plan for them. The proverbial 100-year flood (the flood with a 1% chance of being equaled or exceeded in any given year), for example, now returns more frequently, and the same is true for droughts, wildfires, and other weather-related hazards.
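
Because the 100-year flood is defined by a 1% annual chance rather than a fixed interval, a shift in that annual chance changes lifetime risk considerably. The minimal sketch below computes the chance of at least one such flood over a 30-year span. It assumes independent years and a constant annual probability, which is the stationarity assumption itself. The 2% case is a hypothetical illustration, not a projection for any location.

```python
def prob_at_least_one(annual_prob: float, years: int) -> float:
    """Chance of at least one exceedance in `years`, assuming independent
    years with a constant annual exceedance probability (stationarity)."""
    return 1.0 - (1.0 - annual_prob) ** years

# A 1% annual-chance ("100-year") flood over a 30-year mortgage.
print(f"{prob_at_least_one(0.01, 30):.0%}")  # 26%
# Hypothetical: the same flood level now has a 2% annual chance.
print(f"{prob_at_least_one(0.02, 30):.0%}")  # 45%
```

Doubling the annual chance in this example nearly doubles the 30-year risk, from roughly one in four to nearly one in two.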

The new climate condition — let’s call it non-stationarity — is slowly but persistently making the statistical approaches of the past less useful.

So the key question today is: How can civil engineers plan for extreme events when the past is no longer a harbinger of the future?

Traditional flood frequency analysis

Flood frequency has long been estimated from historical flows, which are treated as statistical samples representative of future floods. But despite its rigor, statistical flood frequency analysis has never been fully satisfactory because the record is often incomplete. Innovative ideas like paleohydrology (the examination of events in the distant past using sediment, vegetation, or geological indicators) have excited civil engineers but have seldom been definitive.
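
The traditional statistical step can be sketched in a few lines. This example fits a Gumbel (extreme value type I) distribution to annual peak flows by the method of moments and reads off the 100-year flood. It is a simplified stand-in: U.S. federal guidance (Bulletin 17C) recommends the log-Pearson Type III distribution, and the flow values here are hypothetical. Note the built-in assumption that every past year is a sample from the same, unchanging distribution.

```python
import math
import statistics

EULER_GAMMA = 0.5772156649  # Euler-Mascheroni constant

def fit_gumbel_moments(annual_peaks):
    """Method-of-moments Gumbel (EV1) fit to a series of annual maximum flows."""
    mean = statistics.mean(annual_peaks)
    sd = statistics.stdev(annual_peaks)  # sample standard deviation
    scale = sd * math.sqrt(6) / math.pi
    loc = mean - EULER_GAMMA * scale
    return loc, scale

def gumbel_quantile(loc, scale, return_period):
    """Flow whose annual exceedance probability is 1 / return_period."""
    non_exceed = 1.0 - 1.0 / return_period
    return loc - scale * math.log(-math.log(non_exceed))

def gumbel_cdf(x, loc, scale):
    """Probability that the annual maximum does not exceed x."""
    return math.exp(-math.exp(-(x - loc) / scale))

# Hypothetical annual peak flows (m^3/s); real studies use gauge records.
peaks = [820, 1150, 640, 980, 1420, 760, 1090, 890, 1310, 700,
         1050, 930, 1600, 810, 1180]
loc, scale = fit_gumbel_moments(peaks)
q100 = gumbel_quantile(loc, scale, 100)
print(f"Estimated 100-year flood: {q100:.0f} m^3/s")
```

With only 15 years of record, the 100-year estimate is an extrapolation far beyond the data, which is one reason the record's incompleteness matters.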

Moreover, shifts in land use, demographics, and economic development have meant that the conditions leading to flooding and damage have always been changing.

One traditional approach to planning for floods has been the design flood, such as the probable maximum flood, which, according to the U.S. Code of Federal Regulations (40 CFR §257.53), is the “flood that may be expected from the most severe combination of critical meteorologic and hydrologic conditions that are reasonably possible in (a) drainage basin.” For example, in 1956, the design flood for the Mississippi River and Tributaries Project was based on hypothetical floods, called “hypo floods,” that combined three historical storms from 1937, 1938, and 1950.

Another traditional approach has been the standard project storm and standard project flood. The standard project storm is the “relationship of precipitation versus time that is intended to be reasonably characteristic of large storms that have or could occur in the locality of concern,” according to the U.S. Army Corps of Engineers’ Hydrologic Engineering Center Hydrologic Modeling System (HEC-HMS) Technical Reference Manual. The standard project flood represents the runoff from the standard project storm and is used as one convenient way to compare levels of protection between projects, calibrate watershed models, and provide a deterministic check of statistical flood frequency estimates.

New risk realities

If, in a world without stationarity, it can no longer be assumed that the historical record is representative of future floods, what can be done? First, we can try to update current data and determine the degree of non-stationarity. Unfortunately, the existing record of hydrologic data is far from complete. The next move might be to adapt legacy approaches, such as design floods, to suit the new reality; this usually results in amended or empirical relationships that compensate for the no-longer-valid assumption of stationarity.

One way to adapt an earlier statistical analysis is to modify its assumptions. Statistical parameters, for example, might be treated as time-varying and updated as new data arrive. Another approach is scenario analysis, in which plausible futures, built from climate projections and anticipated development, are modeled to generate ensembles of weighted predictions. Unfortunately, many of these scenarios extend as much as 50 years into the future, making them, at best, little more than speculation.
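
Treating parameters as time-varying can be illustrated by letting the Gumbel location parameter drift upward each year. In this minimal sketch, which assumes a hypothetical linear trend, a constant scale, and hypothetical parameter values, a levee designed for today's 100-year flood faces a rising annual chance of being exceeded over a 50-year project life.

```python
import math

def gumbel_exceedance(x, loc, scale):
    """Annual probability that the annual maximum exceeds x (Gumbel model)."""
    return 1.0 - math.exp(-math.exp(-(x - loc) / scale))

def nonstationary_exceedance(x, loc0, trend, scale, years):
    """Exceedance probability of a fixed design level x in each future year,
    assuming (hypothetically) the location shifts by `trend` per year
    while the scale parameter stays constant."""
    return [gumbel_exceedance(x, loc0 + trend * t, scale) for t in range(years)]

# Hypothetical parameters (m^3/s); design level = today's 100-year flood.
loc0, scale, trend = 900.0, 250.0, 4.0
design = loc0 - scale * math.log(-math.log(0.99))

probs = nonstationary_exceedance(design, loc0, trend, scale, years=50)
print(f"Annual chance in year 0:  {probs[0]:.2%}")
print(f"Annual chance in year 49: {probs[-1]:.2%}")

# Chance of at least one exceedance over the 50 years (years independent).
survive = 1.0
for p in probs:
    survive *= 1.0 - p
print(f"Chance of exceedance over 50 years: {1 - survive:.0%}")
```

In practice the trend itself would be uncertain and would be re-estimated as data accumulate, which is the "updatable" part of the idea.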

Potential approaches

Although climate stationarity was helpful in predicting future storms and floods, it did not remove all uncertainty. So, engineers hedged against natural disasters by seeking approaches that performed well across a wide range of potential futures, even if those approaches were not optimal in any one scenario. At times, this hedging was accomplished by using conservative factors of safety or through worst-case planning. The aforementioned Mississippi River and Tributaries Project, for example, relied on quite conservative assumptions and an ensemble of mitigation mechanisms, including upstream reservoirs, backwater storage, channel improvements, and flow diversions.
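
One formal way to express this kind of hedging is the minimax-regret criterion, a standard decision rule for choosing under deep uncertainty (offered here as an illustration, not as a method the authors prescribe). Regret is how much more an option costs in a given future than the best option would have cost; the rule picks the option whose worst regret is smallest. The options, scenarios, and costs below are hypothetical.

```python
# Hypothetical total costs ($ millions: construction plus expected damages)
# for three illustrative options under three illustrative climate futures.
COSTS = {
    "lower_levee":        {"mild": 100, "moderate": 200, "severe": 400},
    "higher_levee":       {"mild": 220, "moderate": 190, "severe": 210},
    "levee_plus_storage": {"mild": 130, "moderate": 195, "severe": 260},
}

def minimax_regret(costs):
    """Return the option with the smallest worst-case regret, plus all regrets.
    Regret = option's cost minus the lowest cost achievable in that scenario."""
    scenarios = next(iter(costs.values())).keys()
    best = {s: min(c[s] for c in costs.values()) for s in scenarios}
    max_regret = {
        name: max(c[s] - best[s] for s in scenarios) for name, c in costs.items()
    }
    choice = min(max_regret, key=max_regret.get)
    return choice, max_regret

choice, regrets = minimax_regret(COSTS)
print(choice)   # levee_plus_storage
print(regrets)  # {'lower_levee': 190, 'higher_levee': 120, 'levee_plus_storage': 50}
```

Here the combined option is not the cheapest in any single scenario, yet it is never far from the best, which is the property the paragraph above describes.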

The water community’s use of probable maximum or standard project flood metrics to guide decisions has been difficult to justify on cost-benefit grounds. The Achilles’ heel of these deterministic approaches has been the inability to relate them to probability and risk, and the difficulty of updating them as more information becomes available.

While hazard metrics can be updated, continually updating the mitigation measures is a different and more daunting task. For example, once a levee or floodwall is built to a specified height (what was thought to be the flood level), it is very difficult to raise it. In the case of levees, an increase in height also requires a wider base, because the side slopes must remain flat enough to keep the embankment stable.
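
The geometry behind the last point is simple: for a trapezoidal levee with fixed side slopes, the base width equals the crown width plus twice the slope ratio times the height. The sketch assumes a 3 m crown and 3H:1V side slopes purely for illustration; actual dimensions come from stability analysis and agency design criteria.

```python
def levee_section(height_m, crown_m=3.0, side_slope=3.0):
    """Base width (m) and cross-sectional area (m^2 per m of length) of a
    symmetric trapezoidal levee with side slopes of `side_slope` H : 1 V.
    Default crown width and slope are illustrative assumptions."""
    base = crown_m + 2.0 * side_slope * height_m
    area = 0.5 * (crown_m + base) * height_m
    return base, area

base4, area4 = levee_section(4.0)
base5, area5 = levee_section(5.0)
print(base4, base5)   # 27.0 33.0
print(area5 - area4)  # 30.0  (extra m^3 of fill per metre of levee)
```

Under these assumptions, raising the levee by 1 m widens its base by 6 m and increases the fill volume by half, which helps explain why retrofitting a levee is so much harder than updating a hazard estimate.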

An even more difficult challenge is upgrading existing infrastructure that was designed using criteria that are no longer adequate. The post-Katrina Hurricane and Storm Damage Risk Reduction System for New Orleans was designed using newly developed probability analyses as a substitute for worst-case thinking and legacy standards. This resulted in a conservative system that analytically considered a spectrum of conditions and hazard severities. Unfortunately, congressional language directed the Corps to use the 100-year-flood event as the basic design criterion, which imposed an unwarranted and unwise limitation on the robustness of the new system to meet future change.

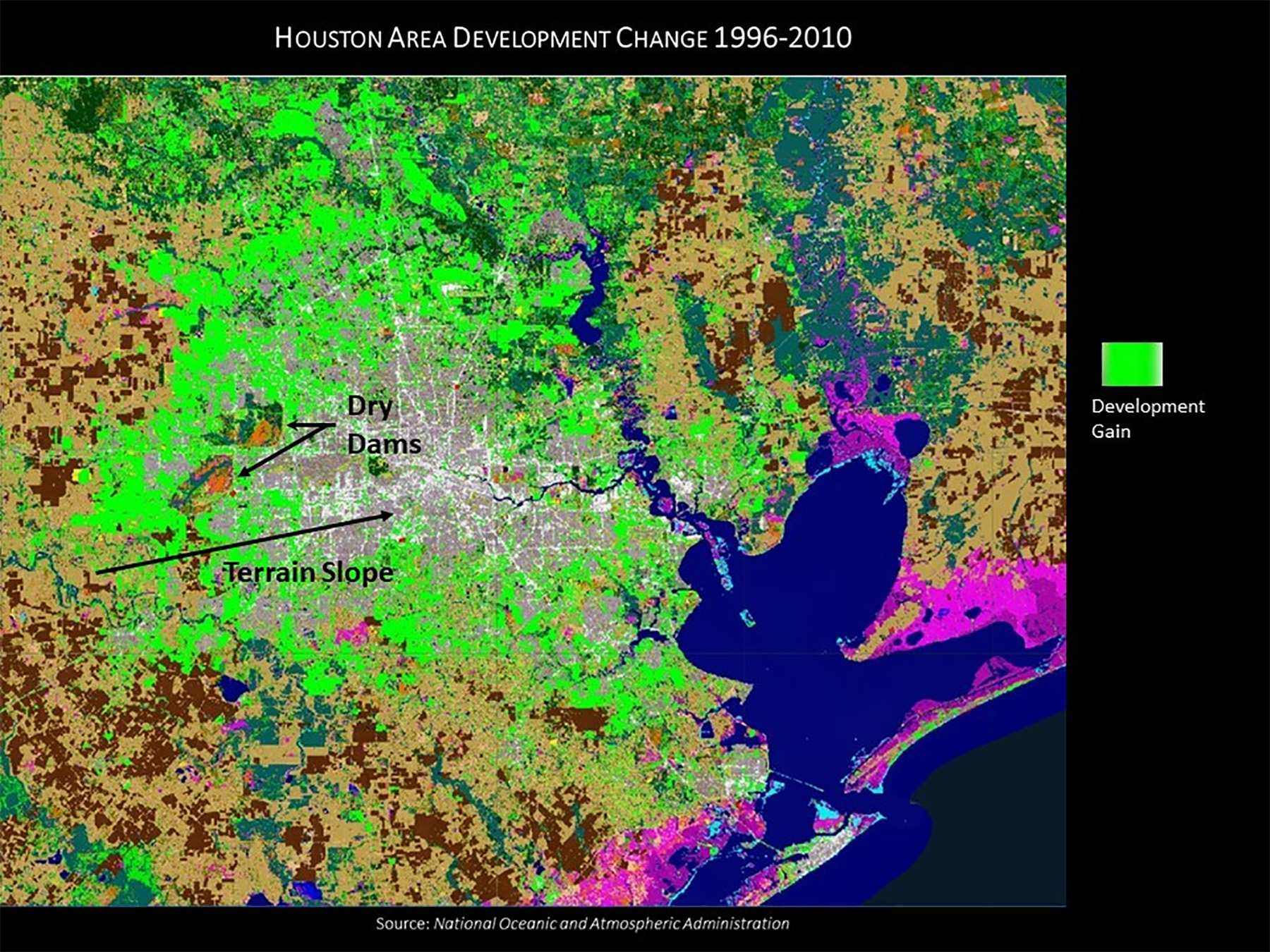

Acquiring the right data is an essential first step in helping engineers adapt infrastructure systems before a water crisis. For example, many of the low-lying areas that flooded in Houston following Hurricane Harvey in 2017 were not contiguous with channels or coasts and had not been identified as areas of risk in flood maps created by the Federal Emergency Management Agency. Indeed, 68% of the 154,170 homes that flooded in Harris County during Harvey were outside the 100-year FEMA floodplain, according to a report from the Harris County Flood Control District. So while mapping programs may record basic elevation data, the analysis needed to identify all vulnerable areas and hazard levels seldom follows.

The principal drivers of the flood risks from non-stationarity may include land use and demography as much as climate change. Prior to hurricanes Katrina and Harvey, New Orleans and Houston experienced extensive urban expansion within the boundaries of their existing flood protection systems. Since risk combines the probability of a hazard with its consequences, engineers need to ask whether their planning should focus on reducing consequences rather than mitigating hazards.
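
One common way to combine hazard probabilities and consequences is expected annual damage: the area under a curve of damage versus annual exceedance probability. The sketch below integrates a hypothetical damage-frequency curve with the trapezoidal rule, ignoring the tail beyond the rarest event listed. It then compares halving every exceedance probability (a hazard-side measure) with halving every damage value (a consequence-side measure, such as land-use limits or floodproofing).

```python
def expected_annual_damage(exceed_probs, damages):
    """Trapezoidal integration of damage over annual exceedance probability.

    exceed_probs must be listed from most to least frequent (decreasing);
    damages[i] is the loss from the event with probability exceed_probs[i].
    Damage beyond the rarest listed event is ignored.
    """
    ead = 0.0
    for i in range(len(exceed_probs) - 1):
        width = exceed_probs[i] - exceed_probs[i + 1]
        ead += 0.5 * (damages[i] + damages[i + 1]) * width
    return ead

# Hypothetical damage-frequency curve (damages in $ millions).
probs = [0.5, 0.1, 0.02, 0.01, 0.002]
damages = [0.0, 5.0, 40.0, 80.0, 200.0]

baseline = expected_annual_damage(probs, damages)
# Hazard side: every damage level becomes half as likely.
hazard_side = expected_annual_damage([p / 2 for p in probs], damages)
# Consequence side: same hazard, but every damage level is halved.
consequence_side = expected_annual_damage(probs, [d / 2 for d in damages])

print(f"{baseline:.2f} {hazard_side:.2f} {consequence_side:.2f}")  # 4.52 2.26 2.26
```

In this simplified model both levers cut expected annual damage equally; which one is cheaper or more durable in practice is exactly the planning question raised above.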

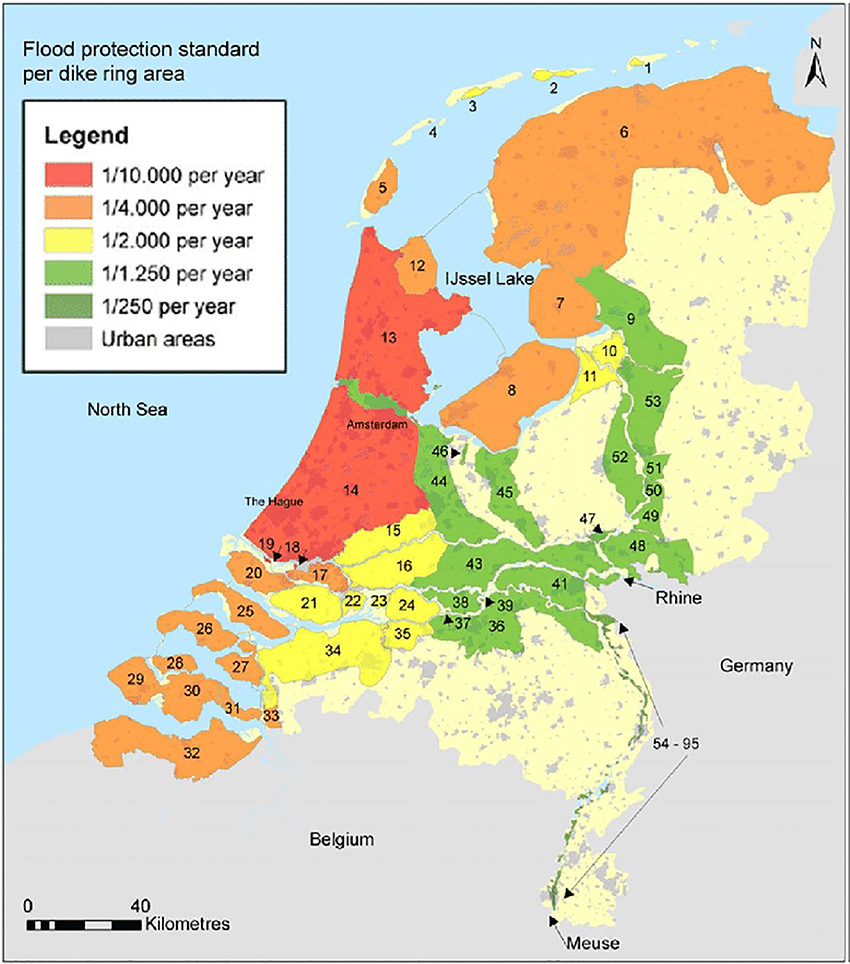

In the Netherlands, infrastructure planning has been augmented with scenario analyses and an expanded menu of goals such as social and environmental benefits. The country’s Delta Programme incorporates the scenario approach in order to develop a strategy for evolving flood management capabilities as conditions change. Scenario analyses drive the development of a series of incremental stages, each building on previous capabilities. This creates a long-term plan that is implemented as needed, with the goal of addressing change as it occurs.
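
A staged plan of this kind can be represented as a sequence of measures, each committed only when a monitored indicator crosses a trigger. The minimal sketch below assumes hypothetical stages, hypothetical trigger values (millimeters of sea-level rise above a 2024 baseline), and a hypothetical steady rise of 6 mm per year; it is not the Delta Programme's actual method.

```python
def trigger_years(stages, observations):
    """Return the first year each stage's trigger is reached (None if never).

    stages: list of (name, trigger_mm) in the order they would be built.
    observations: list of (year, rise_mm) in chronological order.
    """
    result = {}
    for name, trigger_mm in stages:
        result[name] = next(
            (year for year, rise in observations if rise >= trigger_mm), None
        )
    return result

# Hypothetical stages and triggers (mm of rise above a 2024 baseline).
STAGES = [
    ("raise dunes", 100),
    ("add storm-surge barrier", 300),
    ("relocate low-lying facilities", 600),
]
# Hypothetical steady rise of 6 mm per year, monitored annually to 2100.
observed = [(year, 6 * (year - 2024)) for year in range(2025, 2101)]

print(trigger_years(STAGES, observed))
# {'raise dunes': 2041, 'add storm-surge barrier': 2074, 'relocate low-lying facilities': None}
```

Because each stage waits for its trigger, the plan follows the pace of change actually observed. A real plan would also need lead time, setting triggers early enough to design and build each stage before it is needed.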

According to the July 2016 report “On the Front Lines of Rising Seas: US Naval Academy, Maryland” from the Union of Concerned Scientists, the Naval Academy in Annapolis experiences periodic tidal flooding, and by 2070 these flood-prone areas could be underwater 85% of the time, affecting up to 10% of the academy’s land area. Furthermore, by the end of the century, Category 2 hurricanes could expose a third of the academy to a storm surge 5-10 ft deep. Although sea-level rise forecasts extend to the end of the century, financing protection from now until 2100 is a challenge. Given these fiscal limitations, the Naval Academy has prioritized projects based on near-term predictions, placing future construction in a wait-and-see situation.

Paths forward

There are many suggestions for handling non-stationarity, but most remain rooted in historical data. The spectrum of government and private practices points to a small range of policy alternatives, all of which focus on the inadequacy of our knowledge rather than on the randomness of natural events. Adopting them requires an overhaul of planning philosophy: from treating the likelihood, frequency, and severity of events as properties of nature to characterizing uncertainty by the adequacy of models, data, and analyses.

In the vernacular of risk analysis, this means transitioning from a reliance on aleatory uncertainties, which arise from natural randomness, to epistemic uncertainties, which arise from limited information and understanding. It is a transition that has already matured in the field of earthquake engineering. Now we need to bring it to flood risk reduction through the following measures:
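
Earthquake engineering commonly carries epistemic uncertainty through logic trees in probabilistic seismic hazard analysis, where alternative models are weighted by the analyst's confidence in them. The sketch below adapts that idea to floods under stated assumptions: three hypothetical Gumbel models of annual peak flow, with hypothetical weights, evaluated at a single design flow. Year-to-year randomness within each model is the aleatory part; disagreement between the models is the epistemic part.

```python
import math

def gumbel_exceedance(x, loc, scale):
    """Annual exceedance probability of flow x under a Gumbel model."""
    return 1.0 - math.exp(-math.exp(-(x - loc) / scale))

# Hypothetical logic tree: alternative models of annual peak flow (m^3/s),
# each with a subjective weight expressing confidence in that model.
BRANCHES = [
    # (weight, loc, scale)
    (0.5, 900.0, 250.0),   # fit to the historical record
    (0.3, 980.0, 270.0),   # record adjusted for an observed trend
    (0.2, 1080.0, 300.0),  # hypothetical wetter-future projection
]

def hazard_summary(x):
    """Weighted-mean annual exceedance probability of x and its range
    across branches (the spread reflects epistemic uncertainty)."""
    probs = [gumbel_exceedance(x, loc, scale) for _, loc, scale in BRANCHES]
    weights = [w for w, _, _ in BRANCHES]
    mean = sum(w * p for w, p in zip(weights, probs)) / sum(weights)
    return mean, min(probs), max(probs)

mean, low, high = hazard_summary(2050.0)
print(f"Weighted annual chance {mean:.2%}, range {low:.2%} to {high:.2%}")
```

The spread between branches is the part of the uncertainty that better data and models could narrow, which is the kind of uncertainty the text argues planning should be built around.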

Planning without probabilities. If the future cannot be reliably forecast, then we should stop trying to forecast it. Historical data can instead serve as a launching pad to explore possible future scenarios and to develop strategies as change occurs, as in the Delta Programme. Likewise, the design flood for the Lower Mississippi River, developed as part of planning the Mississippi River and Tributaries Project, was an early, less sophisticated version of this strategy.

A scenario approach, however, is vulnerable to “too-small” thinking: exploring only changes that appear realistic rather than extreme conditions. Coupling scenarios with incremental adaptive planning, which can respond when conditions move beyond those anticipated, could therefore be the current gold standard to aim for.

Planning to mitigate damages, not hazards. The geographer Gilbert White, whom the Association of American Geographers considers the father of flood management, famously said: “Floods are ‘acts of God,’ but flood losses are largely acts of man.” This may be a key to future flood risk planning. Changes in population, land use, and terrain may be more predictable than changes in natural hazards. So although policy interventions in those human-focused factors are often difficult, they may offer the best chance for mitigating the impacts of natural disasters. More effective land use and management practices prior to Hurricane Harvey, for instance, could have mitigated a considerable amount of the losses in the Houston region. What’s more, such measures could have limited development in areas above the dry reservoirs upstream from Houston and in low-lying areas below the dry dams.

Adaptive thinking. Because flood-control policies may be barriers to change, adaptive management and life-cycle design will likely be critical in the future. As a result, policies — rather than statistics — will lead the way, especially those that enable flexibility and the evolution of guidance and decision criteria. For example, the idea of designing a single solution for a specific “project life” will no longer be viable. Instead, the life cycle must address the future beyond 50 years.

Future policies will need to embrace incremental adaptations as well as a broader spectrum of criteria that includes societal, environmental, and economic factors. Cost-benefit analysis as currently applied, which clings to legacy criteria such as the 100-year event, is woefully inadequate for the present, let alone the future. New approaches, such as the Coastal Texas Protection and Restoration Feasibility Study (a project for flood reduction, hurricane and storm damage mitigation, and ecosystem restoration along the entire Texas coast), were set up in response to Hurricane Harvey. They feature an encouraging mix of old and new: Scenarios will be used to support planning, and nature-based components will be used alongside traditional structural barriers.

Rethinking the 100-year flood. An unfortunate impediment to progress on flood risk reduction is Congress’s continued fixation on the 100-year flood as a key project criterion. When the U.S. adopted the 100-year flood as part of the National Flood Insurance Program, it eventually displaced the standard project flood as the de facto national standard for flood risk. But this was done without a broad consideration of its impact on flood protection. The 100-year event should no longer be treated as the standard for flood risk.

A need for action

By most accounts, we are in a transition period, with a window of time in which to further develop new approaches and more adaptive policies. But that window will close.

Unfortunately, no single federal agency in the U.S. is charged with coordinating national water issues. Indeed, back in 2009, Rep. James L. Oberstar, then chair of the House Committee on Transportation and Infrastructure — which prepares the biennial Water Resources Development Act — reported to a group of water experts in Washington, D.C., that there were 24 federal agencies with water responsibilities, as well as a number of land management agencies with related responsibilities.

Oberstar stated: “(T)he diverse water resources challenges throughout the United States are often studied, planned, and managed in individual silos, independently of other water areas and projects.” This often leads to “local and narrowly focused project objectives with little consideration of the broader watersheds that surround the project.”

Sadly, nothing has changed in the intervening 15 years.

What's next?

To start, the authors believe there needs to be a government-wide coordinated effort to manage national water issues in general and flooding in particular. It is time for Congress to eliminate the siloed treatment of water issues and restore funding to the Water Resources Council, which was created in 1965 and chartered to assess the adequacy of water supplies for the U.S. as a whole and in each region of the nation, and to make recommendations to the president regarding federal policies and programs, among other tasks. The Council was defunded during the Reagan administration but remains in existence for administrative purposes.

The engineering community should also encourage water agencies at the state level to work as partners with the federal government. The 2015 opening of the Office of Water Prediction and the National Water Center within the National Oceanic and Atmospheric Administration has initiated efforts to create national water models; to improve the quality, timeliness, and accuracy of such products; and to serve as an innovation incubator and research accelerator for the water community. These new bodies offer a glimpse into what could arise from coordinated federal-state cooperation.

A reenergized Water Resources Council and new government organizations such as the Office of Water Prediction will help unify the disparate efforts now underway. They might also gradually eliminate overlaps and gaps in the national water effort. Toward these goals, the authors believe the Water Resources Council should initially concentrate on the following actions:

- Better define and quantify the non-stationarity effect. Until we have a better understanding of how non-stationarity is influencing current analyses and decisions (and which sources of non-stationarity are dominant), it is difficult to chart a path forward for policy or practice.

- Expand collaboration on non-stationarity flood risk. Pilot projects, such as the Coastal Texas Protection and Restoration Feasibility Study, should be undertaken in which traditional and transitional approaches are applied. In 2020, Congress also directed development of comprehensive plans to identify the actions needed to manage the Lower Mississippi River basin for flood risk, navigation, ecosystem restoration, water supply, hydropower, and recreation.

- Examine policy-related barriers to new adaptive planning methods. Antiquated budgetary procedures that fail to acknowledge the social and environmental benefits of contemporary project designs need close scrutiny, as do unnecessary congressional interventions in project designs and funding streams. This examination should also focus attention on the consequences of a slow transition of flood risk policy.

- Evaluate the dangers of perpetuating the 100-year-flood mentality and its impact on project design and risk definition. Given continual hydrologic and anthropogenic changes, the 100-year flood is a moving target; treating it as a fixed quantity, as if stationarity still held, only worsens the flood risk problem.

Civil engineers must be in the middle of the effort to find solutions and define a path forward. Rather than waiting for project plans to be handed to them, they must participate at all levels in the political and scientific discussions that will shape the future. With its tremendous knowledge base, the engineering profession must educate its members to better understand the issues and encourage them to enter the fray.

Gerald E. Galloway, Ph.D., P.E., BC.WRE (Hon.), Dist.M.ASCE, F.ASCE, is a professor emeritus in the Department of Civil and Environmental Engineering at the University of Maryland.

This article first appeared in the July/August 2024 issue of Civil Engineering as “Flood Risk without Stationarity.”